How vm.min_free_kbytes works

The kernel itself needs to allocate memory in order to keep the system functioning properly. If it allowed all memory to be handed out, it could struggle to find memory for the routine operations that keep the OS running smoothly. That is why the kernel provides the tunable vm.min_free_kbytes, which forces the kernel’s memory manager to keep at least the specified number of kilobytes free. Here is the official definition from the Linux kernel documentation:

“This is used to force the Linux VM to keep a minimum number of kilobytes free. The VM uses this number to compute a watermark[WMARK_MIN] value for each lowmem zone in the system. Each lowmem zone gets a number of reserved free pages based proportionally on its size. Some minimal amount of memory is needed to satisfy PF_MEMALLOC allocations; if you set this to lower than 1024KB, your system will become subtly broken, and prone to deadlock under high loads. Setting this too high will OOM your machine instantly.”

Validating vm.min_free_kbytes Works

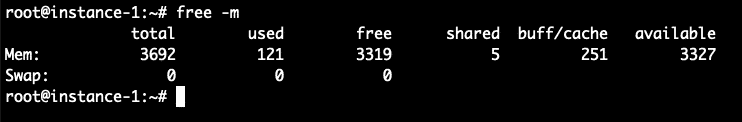

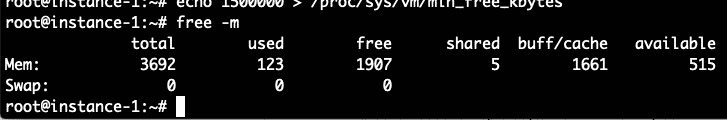

To test that min_free_kbytes works as designed, I created a Linux virtual instance with only 3.75 GB of RAM and inspected it with the free command.

The free output above uses the -m flag so that values are printed in MB. The total memory is between 3.5 and 3.75 GB: 121 MB is used, 3.3 GB is free, and 251 MB is used by the buffer cache. 3.3 GB of memory is available.
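The same figures can be read straight from /proc/meminfo, which is useful when scripting the checks in this article. The following is a minimal sketch, not the method used for the screenshots: the helper name meminfo_mb is made up for illustration, and it assumes a Linux system where /proc/meminfo reports values in kB.

```shell
# Print one /proc/meminfo field in MB (the file itself reports kB).
# Usage: meminfo_mb FIELD [FILE]   (FILE defaults to /proc/meminfo)
meminfo_mb() {
    awk -v field="$1:" '$1 == field { printf "%d\n", $2 / 1024 }' "${2:-/proc/meminfo}"
}

# Show the fields that correspond to free's total, free and available columns.
if [ -r /proc/meminfo ]; then
    for f in MemTotal MemFree MemAvailable; do
        printf '%-13s %s MB\n' "$f" "$(meminfo_mb "$f")"
    done
fi
```

Running this before and after each change to vm.min_free_kbytes gives the same free and available numbers that free -m prints.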

Now we will change the value of vm.min_free_kbytes and see how it affects system memory. We echo the new value into the proc virtual filesystem to change the kernel parameter, then read it back with sysctl:

# sysctl vm.min_free_kbytes
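The write itself does not appear in the text, so here is a hedged sketch of how it could be done. The function name set_min_free_kbytes and the value 1500000 (roughly 1.5 GB) are assumptions for illustration; the guard against values below 1024 KB follows the warning in the kernel documentation quoted earlier.

```shell
# Write a new vm.min_free_kbytes value, refusing values the kernel
# documentation warns against (below 1024 KB).
# Usage: set_min_free_kbytes VALUE_KB [TARGET]
# TARGET defaults to the real tunable; pass another path for a dry run.
set_min_free_kbytes() {
    value=$1
    target=${2:-/proc/sys/vm/min_free_kbytes}
    case $value in
        ''|*[!0-9]*)
            echo "value must be a whole number of KB" >&2
            return 1
            ;;
    esac
    if [ "$value" -lt 1024 ]; then
        echo "refusing to set less than 1024 KB" >&2
        return 1
    fi
    echo "$value" > "$target"
}

# As root (1500000 is an assumed value, roughly 1.5 GB):
#   set_min_free_kbytes 1500000
#   sysctl vm.min_free_kbytes
```

The optional second argument exists only so the function can be tried against a scratch file before touching the live kernel setting.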

You can see that the parameter was changed to approximately 1.5 GB and has taken effect. Now let’s run the free command again to see what the system reports.

The free memory and the buffer cache are unchanged by the command, but the amount of memory displayed as available has dropped from 3327 MB to 1222 MB. That is a reduction of roughly 2.1 GB, which is in the same range as (and somewhat larger than) the 1.5 GB we just told the kernel to keep free.

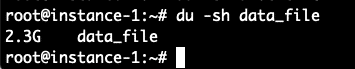

Now let’s create a data file of a little over 2 GB and see what reading it into the buffer cache does to these values. Two lines of bash are enough: generate a 35 MB random file with the dd command, then append it 70 times to a new data_file:

# for i in $(seq 1 70); do echo "$i"; cat /root/d1.txt >> /root/data_file; done
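Only the copy loop is shown above; the dd step that creates /root/d1.txt is not. The sketch below combines both steps under stated assumptions: the function name build_data_file is hypothetical, /dev/urandom is assumed as the random source, and bs=1M with iflag=fullblock assumes GNU dd.

```shell
# Build a large file by generating one random chunk with dd and
# appending it COPIES times.
# Usage: build_data_file CHUNK_MB COPIES DIR
build_data_file() {
    chunk_mb=$1
    copies=$2
    dir=$3
    # iflag=fullblock (GNU dd) guards against short reads from /dev/urandom.
    dd if=/dev/urandom of="$dir/d1.txt" bs=1M count="$chunk_mb" \
        iflag=fullblock 2>/dev/null || return 1
    : > "$dir/data_file"    # start from an empty output file
    i=1
    while [ "$i" -le "$copies" ]; do
        cat "$dir/d1.txt" >> "$dir/data_file"
        i=$((i + 1))
    done
}

# With the sizes described in the article this would be:
#   build_data_file 35 70 /root
```

Truncating data_file first means the function can be rerun without the file growing on every run, which the plain append loop does not protect against.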

Let’s read the file and discard its contents by redirecting the output of cat to /dev/null (cat data_file > /dev/null), which pulls the file through the buffer cache.

So what has this set of maneuvers done to our system memory? Let’s check it now:

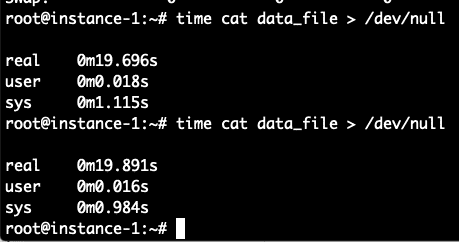

Analyzing the results above, we still have 1.8 GB of free memory, so the kernel has protected a large chunk of memory as reserved because of our min_free_kbytes setting. The buffer cache has used 1691 MB, which is less than the 2.3 GB size of our data file. Apparently the entire data_file could not be held in cache because there was not enough available memory for the buffer cache. We can confirm that the file is not fully cached by timing repeated reads of it. If it were cached, reading it would take a fraction of a second. Let’s try it.

# time cat data_file > /dev/null

The file read took almost 20 seconds, which implies it is almost certainly not all cached.

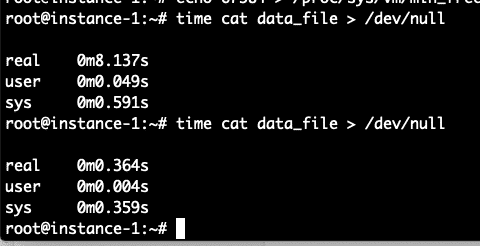

As one final validation, let’s reduce vm.min_free_kbytes to give the page cache more room to operate. We can expect to see the cache working and the file read getting much faster on the second attempt.

# time cat data_file > /dev/null

# time cat data_file > /dev/null

With the extra memory available for caching, the file read time dropped from 20 seconds to 0.364 seconds once the whole file was in cache.
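The cold-versus-cached comparison can also be scripted. This is a small sketch rather than what was run for the article: the helper name read_ms is made up, and it relies on the %N (nanoseconds) format of GNU date.

```shell
# Time one sequential read of FILE and print the elapsed milliseconds.
# Relies on GNU date's %N (nanoseconds) format.
read_ms() {
    start=$(date +%s%N)
    cat "$1" > /dev/null
    end=$(date +%s%N)
    echo $(( (end - start) / 1000000 ))
}

# Compare a first read with an immediate second read:
#   echo "first:  $(read_ms data_file) ms"
#   echo "second: $(read_ms data_file) ms"
```

If the second read is not dramatically faster than the first, the file most likely did not fit in the cache.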

I am curious to do another experiment. What happens to malloc calls that allocate memory from a C program in the face of this really high vm.min_free_kbytes setting? Will the malloc fail? Will the system die? First, set vm.min_free_kbytes back to the really high value to resume our experiments.

Let’s look again at our free memory:

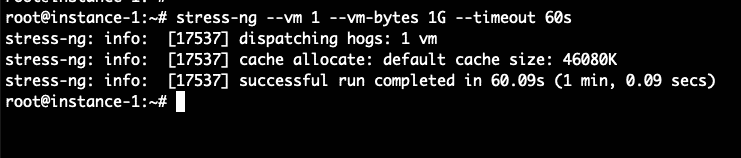

Theoretically we have 1.9 GB free and 515 MB available. Let’s use a stress test program called stress-ng to consume some memory and see where we fail. We will use the vm stressor with a single worker and try to allocate 1 GB of memory (stress-ng --vm 1 --vm-bytes 1G --timeout 60s). Since we have only reserved 1.5 GB on a 3.75 GB system, I guess this should work.

stress-ng: info: [17537] dispatching hogs: 1 vm

stress-ng: info: [17537] cache allocate: default cache size: 46080K

stress-ng: info: [17537] successful run completed in 60.09s (1 min, 0.09 secs)

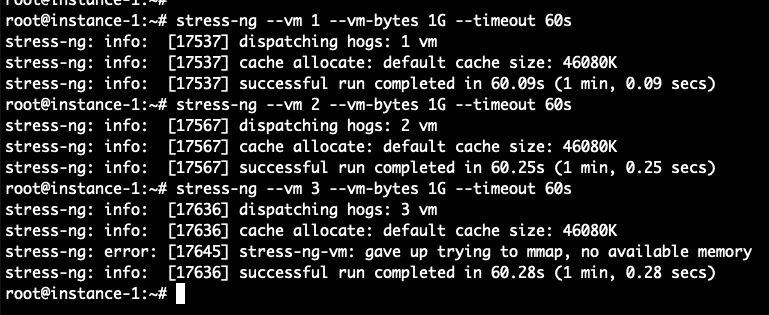

Let’s try it again with more workers. We can try 1, 2, 3, and 4 workers, and at some point it should fail. In my test it passed with 1 and 2 workers but failed with 3 workers.

# stress-ng --vm 2 --vm-bytes 1G --timeout 60s

# stress-ng --vm 3 --vm-bytes 1G --timeout 60s
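The step-up in worker count can be automated with a short loop. The sketch below is illustrative only: the function name first_failing_workers and the STRESS_CMD override are hypothetical, and it assumes stress-ng exits with a non-zero status when a stressor fails.

```shell
# Run stress-ng with 1..MAX vm workers of 1G each and print the first
# worker count whose run exits non-zero, or "none" if every run passes.
# STRESS_CMD is a hypothetical override so the loop can be exercised
# without stress-ng installed.
first_failing_workers() {
    max=$1
    n=1
    while [ "$n" -le "$max" ]; do
        if ! "${STRESS_CMD:-stress-ng}" --vm "$n" --vm-bytes 1G \
                --timeout 60s > /dev/null 2>&1; then
            echo "$n"
            return 0
        fi
        n=$((n + 1))
    done
    echo none
}

# Usage: first_failing_workers 4
```

Each passing run takes the full 60 second timeout, so a call with four workers can take several minutes.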

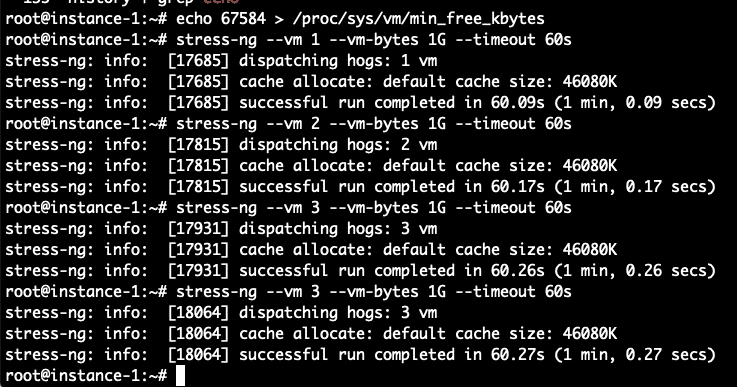

Let’s reset vm.min_free_kbytes to a low number and see if that helps us run 3 memory stressors with 1 GB each on a 3.75 GB system.

# stress-ng --vm 3 --vm-bytes 1G --timeout 60s

This time it ran successfully without error; I tried it twice without problems. So I can conclude that there is a behavioral difference: more memory is available for malloc when vm.min_free_kbytes is set to a lower value.

Default setting for vm.min_free_kbytes

The default value for the setting on my system is 67584, which is about 66 MB or about 1.8% of the RAM on the system. For safety reasons on a heavily thrashed system, I would tend to increase it a bit, perhaps to 128 MB, to allow for more reserved free memory. For average usage, however, the default value seems sensible enough. The official documentation warns about making the value too high. Setting it to 5 or 10% of the system RAM is probably not the intended usage of the setting, and is too high.
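The arithmetic behind those figures is easy to check. In this sketch the helper name describe_min_free is made up, and the total of 3670016 KB (3.5 GB) is an assumed figure based on the total that free reported earlier.

```shell
# Express a min_free_kbytes value in MB and as a share of total RAM.
# Usage: describe_min_free MIN_FREE_KB TOTAL_KB
describe_min_free() {
    awk -v min="$1" -v total="$2" 'BEGIN {
        printf "%d MB, %.1f%% of RAM\n", min / 1024, 100 * min / total
    }'
}

# 67584 is the default from this system; 3670016 KB (3.5 GB) is an
# assumed total.
describe_min_free 67584 3670016
```

The same helper makes it easy to see what a candidate value such as 131072 (128 MB) amounts to as a percentage before applying it.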

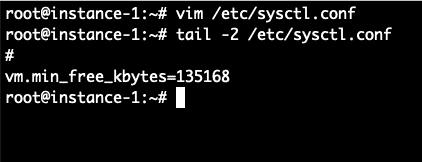

Setting vm.min_free_kbytes to survive reboots

To ensure the setting survives reboots and is not restored to its default value, make the sysctl setting persistent by putting the desired new value in the /etc/sysctl.conf file.
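One way to do that without leaving duplicate entries behind is sketched below. The function name persist_sysctl is hypothetical and the value 131072 in the usage comment is only an example; the optional third argument lets you try the function on a copy of the file first.

```shell
# Set KEY = VALUE in a sysctl configuration file, replacing any
# existing assignment of KEY and keeping all other lines.
# Usage: persist_sysctl KEY VALUE [FILE]   (FILE defaults to /etc/sysctl.conf)
persist_sysctl() {
    key=$1
    value=$2
    conf=${3:-/etc/sysctl.conf}
    # Escape the dots in the key so they match literally in the regex.
    pat=$(printf '%s' "$key" | sed 's/\./\\./g')
    tmp=$(mktemp) || return 1
    if [ -f "$conf" ]; then
        grep -v -E "^[[:space:]]*${pat}[[:space:]]*=" "$conf" > "$tmp"
    fi
    printf '%s = %s\n' "$key" "$value" >> "$tmp"
    # Overwrite in place so the file keeps its owner and permissions.
    cat "$tmp" > "$conf" && rm -f "$tmp"
}

# As root (131072 is an example value, 128 MB):
#   persist_sysctl vm.min_free_kbytes 131072
#   sysctl -p    # reload /etc/sysctl.conf without rebooting
```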

Conclusion

We have seen that the vm.min_free_kbytes Linux kernel tunable can be modified to reserve memory so that the system stays more stable, especially during heavy usage and heavy memory allocation. The default setting might be a little too low, especially on high-memory systems, and a careful increase is worth considering. We have also seen that the memory reserved by this tunable prevents the OS cache from using all the memory and prevents some malloc operations from using all the memory too.